Unlocking the Power of Reuters Scraping: Techniques, Applications, and Ethical Considerations

Discover the essentials of Reuters scraping, from tools and techniques to real-world applications.

Learn how to ethically and legally harness this valuable data source for financial analysis, research, and business intelligence.

Among the many sources of news and financial information, Reuters stands out for its reliability and breadth, reporting from nearly every corner of the globe. For developers, researchers, and businesses that want to tap into this information at scale, scraping Reuters is an appealing option. However, the practice comes with technical challenges as well as legal and ethical considerations. In this blog post, we explore how Reuters scraping works, what it is used for, and the legal and ethical landscape surrounding it.

What is Web Scraping?

Web scraping involves extracting data from websites using automated tools. It’s akin to mining for precious metals, but instead of gold or silver, the target is valuable data. Scraping scripts, often called bots or crawlers, request web pages, parse their HTML, and collect the relevant information for purposes ranging from market analysis to academic research.

Understanding Reuters

Reuters, founded in 1851, has grown into one of the most trusted news organizations globally. With a vast network of journalists and correspondents, it provides real-time news on a wide range of topics, including politics, finance, technology, and more. For many, accessing Reuters’ rich database can provide a competitive edge, making the practice of Reuters scraping particularly appealing.

The Mechanics of Reuters Scraping

Tools and Techniques

- Python Libraries:

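- Beautiful Soup and Scrapy are popular Python tools for web scraping. Beautiful Soup parses HTML and XML documents into a tree that is easy to navigate and search; it does not download pages itself, so it is usually paired with an HTTP library such as requests. Scrapy, on the other hand, is a full crawling framework that handles requests, scheduling, and data export, which makes it better suited to large-scale scraping projects.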

- APIs:

- Instead of extracting data directly from web pages, you can often use an API. Many publishers, including Reuters, offer APIs (typically under a commercial license) that provide structured access to their content, usually with more stability and less legal risk than scraping HTML.

- Headless Browsers:

- Tools like Puppeteer and Selenium drive a real browser, often in headless mode (without a visible window), to load and interact with web pages just as a user would. This is particularly useful for scraping content that is rendered by JavaScript and is therefore missing from the raw HTML.
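
To make the parsing step concrete, here is a minimal sketch that pulls headline links out of a listing page. It uses Python's standard-library `html.parser` so it runs without extra installs; Beautiful Soup offers a friendlier API for the same job. The markup is hypothetical: the assumption that headlines sit inside `<h3 class="headline">` elements is for illustration only, since Reuters' real page structure is different and changes over time.

```python
from html.parser import HTMLParser


class HeadlineParser(HTMLParser):
    """Collect (title, href) pairs from <a> tags nested in <h3 class="headline">.

    The "headline" class is a hypothetical marker; inspect the real page
    to find the elements that actually identify article links.
    """

    def __init__(self):
        super().__init__()
        self.in_headline = False  # currently inside a matching <h3>
        self.current_href = None  # href of the <a> being read, if any
        self.current_text = []    # text chunks of that <a>
        self.results = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "h3" and "headline" in (attrs.get("class") or "").split():
            self.in_headline = True
        elif tag == "a" and self.in_headline:
            self.current_href = attrs.get("href")
            self.current_text = []

    def handle_data(self, data):
        if self.current_href is not None:
            self.current_text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self.current_href is not None:
            # Collapse runs of whitespace and newlines inside the link text.
            title = " ".join("".join(self.current_text).split())
            self.results.append((title, self.current_href))
            self.current_href = None
        elif tag == "h3":
            self.in_headline = False


def extract_headlines(html):
    parser = HeadlineParser()
    parser.feed(html)
    parser.close()
    return parser.results


# Hypothetical markup, not copied from reuters.com.
sample = """
<h3 class="headline"><a href="/markets/example-1">Stocks  rise
 on data</a></h3>
<h3 class="other"><a href="/ignored">Not a headline</a></h3>
<h3 class="headline big"><a href="/world/example-2">Talks resume</a></h3>
"""
print(extract_headlines(sample))
# → [('Stocks rise on data', '/markets/example-1'), ('Talks resume', '/world/example-2')]
```

In practice you would feed it HTML fetched with an HTTP client, and only from pages you are permitted to scrape.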

Step-by-Step Guide for Reuters Scraping

- Identify the Data:

- Determine what information you need. Are you looking for financial news, market trends, or global events?

- Set Up Your Environment:

- Install necessary libraries (e.g., Beautiful Soup, Scrapy) and set up your development environment.

- Request Access:

- Check whether Reuters offers an API or licensed data feed that covers your needs. If it does not, review the site’s terms of service and robots.txt before turning to web scraping tools.

- Scrape the Data:

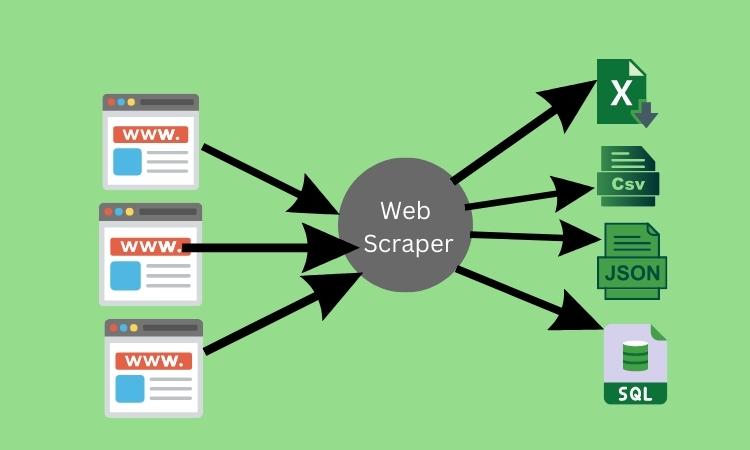

- Write scripts to navigate to the required pages, extract the necessary information, and store it in a structured format like CSV or a database.

- Data Cleaning and Analysis:

- Raw data often needs cleaning and preprocessing. Use libraries like Pandas in Python for this purpose.

- Ethical Considerations:

- Ensure your scraping practices comply with Reuters’ terms of service and legal guidelines.
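
The storing and cleaning steps can be sketched with the standard library alone. The record layout (`title` and `url` keys), the base URL used to resolve relative links, and the helper names are assumptions for illustration; for larger datasets, pandas offers equivalent operations such as `drop_duplicates` and `to_csv`.

```python
import csv
import io
from urllib.parse import urljoin

# Assumed base URL for resolving relative links found on listing pages.
BASE_URL = "https://www.reuters.com"


def clean_records(raw_records):
    """Normalize whitespace, resolve relative URLs, and drop incomplete or duplicate rows."""
    seen_urls = set()
    cleaned = []
    for record in raw_records:
        title = " ".join((record.get("title") or "").split())
        href = record.get("url")
        if not title or not href:
            continue  # skip rows missing a title or a link
        url = urljoin(BASE_URL, href)
        if url in seen_urls:
            continue  # the same article often appears in several sections
        seen_urls.add(url)
        cleaned.append({"title": title, "url": url})
    return cleaned


def write_csv(records, stream):
    """Write records to any text stream, such as an open file or io.StringIO."""
    writer = csv.DictWriter(stream, fieldnames=["title", "url"])
    writer.writeheader()
    writer.writerows(records)


# Hypothetical raw rows, as a parser might produce them.
raw = [
    {"title": "  Stocks rise\non data ", "url": "/markets/example-1"},
    {"title": "Stocks rise on data", "url": "/markets/example-1"},  # duplicate
    {"title": "", "url": "/world/example-2"},  # missing title
]
buffer = io.StringIO()
write_csv(clean_records(raw), buffer)
print(buffer.getvalue())
```

When writing to a real file instead of `io.StringIO`, open it with `newline=""`, as the csv module documentation recommends.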

Applications of Reuters Scraping

Financial Market Analysis

Financial analysts and hedge funds leverage Reuters scraping to gather real-time news and historical data. This information helps in making informed decisions, predicting market trends, and executing trades based on the latest news.

Academic Research

Researchers in fields like political science, economics, and media studies use data from Reuters to analyze trends, study media bias, and track the development of global events over time.

Business Intelligence

Companies use Reuters scraping to keep an eye on competitors, monitor industry trends, and gather insights for strategic planning. This real-time data can be crucial for staying ahead in a competitive market.
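
As a small illustration of trend monitoring, the sketch below counts how often watch-list terms appear in headlines on each day. The article records, company names, and function name are hypothetical, and plain substring matching is a deliberate simplification (a short term can match inside an unrelated word).

```python
from collections import Counter, defaultdict


def mention_counts(articles, watch_terms):
    """Return {date: Counter({term: number of headlines mentioning term})}, case-insensitively."""
    counts = defaultdict(Counter)
    for article in articles:
        headline = article["title"].lower()
        for term in watch_terms:
            if term.lower() in headline:  # simple substring match
                counts[article["date"]][term] += 1
    return counts


# Hypothetical scraped headlines and watch list.
articles = [
    {"date": "2024-05-01", "title": "Acme Corp beats forecasts"},
    {"date": "2024-05-01", "title": "Globex cuts jobs as Acme expands"},
    {"date": "2024-05-02", "title": "Globex shares fall"},
]
for date, counter in sorted(mention_counts(articles, ["Acme", "Globex"]).items()):
    print(date, dict(counter))
# → 2024-05-01 {'Acme': 2, 'Globex': 1}
# → 2024-05-02 {'Globex': 1}
```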

Content Aggregation

News aggregators and content curators scrape Reuters to provide comprehensive news feeds to their users. By combining data from multiple sources, they offer a more rounded perspective on current events.

Legal and Ethical Considerations

While Reuters scraping offers significant benefits, it also raises ethical and legal concerns that cannot be overlooked.

Terms of Service

Most websites, including Reuters, have terms of service that outline acceptable use. Scraping without permission can violate these terms, leading to potential legal action.

Intellectual Property

Content on Reuters is protected by intellectual property laws. Scraping and republishing this content without permission can infringe on these rights; crediting Reuters as the source does not, on its own, make republication lawful.

Server Load and Blocking

Frequent scraping can put a strain on a website’s servers, potentially affecting its performance for other users. Websites may implement measures to detect and block scraping attempts, such as rate limiting and CAPTCHAs.
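
On the scraper's side, the usual response to rate limiting is to slow down. This sketch computes an exponential backoff delay and honours a numeric `Retry-After` header, which servers may send with an HTTP 429 (Too Many Requests) response. The function name and default values are illustrative assumptions, and the code only computes the delay; it performs no HTTP requests itself.

```python
import random


def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0, jitter=False):
    """Seconds to wait before retry number `attempt` (0-based).

    If the server supplied a Retry-After value in seconds, honour it;
    otherwise double the wait on each attempt, capped at `cap`.
    """
    if retry_after is not None:
        try:
            return max(0.0, float(retry_after))
        except ValueError:
            pass  # Retry-After may also be an HTTP date; fall back to backoff
    delay = min(cap, base * (2 ** attempt))
    if jitter:
        delay = random.uniform(0, delay)  # "full jitter" spreads out retries
    return delay


for attempt in range(5):
    print(attempt, backoff_delay(attempt))
# → 0 1.0
# → 1 2.0
# → 2 4.0
# → 3 8.0
# → 4 16.0
```

Enabling `jitter` keeps many clients that were blocked at the same moment from retrying in lockstep.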

Privacy Concerns

While scraping public news data generally does not involve personal information, any scraping activity must still consider privacy laws and regulations, especially when dealing with data that could potentially identify individuals.


Best Practices for Ethical Scraping

- Respect robots.txt:

- Most websites publish a robots.txt file at the site root that indicates which parts of the site automated crawlers may access. Adhere to these guidelines.

- Use APIs When Available:

- Always prefer using an API over direct scraping if one is available. APIs are designed for structured data access and usually come with clear usage policies.

- Rate Limiting:

- Implement rate limiting in your scraping scripts to avoid overwhelming the server. This involves setting delays between requests.

- Attribution:

- Always attribute the source of your data. If you are using Reuters’ data, make it clear where the information came from.

- Compliance with Legal Requirements:

- Stay informed about legal requirements related to web scraping and ensure your practices comply with relevant laws and regulations.
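
The robots.txt and rate-limiting practices above can be combined in a short sketch using Python's `urllib.robotparser`. The user-agent string and the robots.txt content are hypothetical (this is not Reuters' actual file), the `RateLimiter` class is an illustrative helper, and the five-second fallback delay is an arbitrary conservative choice.

```python
import time
import urllib.robotparser

USER_AGENT = "example-research-bot"  # hypothetical; identify your bot honestly


def build_robot_rules(robots_txt):
    """Parse robots.txt text you have already fetched (e.g. from the site root)."""
    parser = urllib.robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class RateLimiter:
    """Sleep as needed so consecutive wait() calls are at least `interval` seconds apart."""

    def __init__(self, interval):
        self.interval = interval
        self._last = None

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


# Hypothetical robots.txt content, NOT the real Reuters file.
rules = build_robot_rules("""
User-agent: *
Disallow: /private/
Crawl-delay: 2
""")
print(rules.can_fetch(USER_AGENT, "https://www.reuters.com/markets/"))   # True
print(rules.can_fetch(USER_AGENT, "https://www.reuters.com/private/x"))  # False

# Respect Crawl-delay if present, otherwise fall back to a conservative default.
limiter = RateLimiter(interval=rules.crawl_delay(USER_AGENT) or 5)
```

Calling `limiter.wait()` before each request (and skipping any URL for which `can_fetch` returns False) keeps the scraper within both limits.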

FAQs

How can I extract data from Reuters?

To extract data from Reuters, you can use web scraping tools like Beautiful Soup or Scrapy, or access data through Reuters’ API if available. Ensure you comply with their terms of service and legal guidelines.

Does Reuters have an API?

Yes, Reuters offers APIs that provide structured access to their news and data. These APIs are designed for developers to integrate Reuters content into their applications.

What is the Reuters dataset?

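A Reuters dataset is a collection of news articles, and sometimes financial data, sourced from Reuters. Among the best known are the research corpora Reuters-21578, a set of newswire stories from 1987 widely used as a text-classification benchmark, and RCV1 (Reuters Corpus Volume 1), a much larger collection of stories from 1996 and 1997. These datasets are used for various purposes, including market analysis, academic research, and business intelligence.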

Conclusion

Reuters scraping presents a valuable opportunity to access a vast array of information for various applications, from financial analysis to academic research. However, this practice must be approached with a clear understanding of the ethical and legal landscape. By respecting terms of service, adhering to intellectual property laws, and following best practices, individuals and organizations can responsibly leverage the power of Reuters scraping.